The following is material excerpted, adapted, and annotated from Mark Graban’s book Lean Hospitals: Improving Quality, Patient Safety, and Employee Engagement.

The terms “mistake-proofing” and “error-proofing” can be used interchangeably, as John Grout says… mistake and error are basically synonyms.

Creating Quality at the Source through Error-Proofing

Ensuring quality at the source (the Japanese word jidoka), through detecting and preventing errors, is one of the pillars of the Toyota Production System.[i] The history of jidoka dates to the time before the company even built cars. Sakichi Toyoda invented a weaving loom that automatically stopped when the thread broke; this invention saved time, increased productivity, and reduced the waste of defective fabric.[ii] The automatic weaving loom and that level of built-in quality have served as an inspiration throughout Toyota and among adopters of the Lean approach.

Being Careful Is Not Enough

When managers blame individuals for errors, there is an underlying, but unrealistic, assumption that errors could be prevented if people would just be more careful. Errors are often viewed as the result of careless or inattentive employees. Warning, caution, or “be careful” signs throughout our hospitals are evidence of this mindset. If signs or memos were enough, we would have already solved our quality and safety problems, not only in hospitals but also in the world around us.


A good exercise is to go on a “gemba walk” through a department, looking for signs that remind or tell employees to be careful. Each sign is an indicator of a process problem and evidence that the root cause of the problem has not been properly addressed through error-proofing. A good metric for the “Leanness” of a hospital might be the number of warning signs—fewer warning signs indicate that problems have been solved and prevented, eliminating the need for less effective signs and warnings.

For example, we might see a sign in the pharmacy that reads:

“Please remember fridge items for cart delivery.”

This suggests that this process error was made at least once. We need to ask why it was possible to forget the refrigerated items. Does the department not have a standard checklist that is used before going out to make a delivery? Is the refrigerator in an inconvenient location, making it easy to forget about when employees are in a rush?

One hospital laboratory had two analyzers that performed similar testing. For one analyzer, five specimen tubes would get loaded into a plastic rack, which was then inserted into the analyzer. The newer analyzer had a warning from the manufacturer printed on the loading chute that stated,

“CAUTION: When setting the stat sample rack, confirm the direction of the rack.”

When asked how often a rack was inserted incorrectly, facing the wrong way, the lab supervisor said it happened quite frequently, causing the rack to jam and not load properly, delaying test results and taking up time. The older analyzer, used as a backup, was physically error-proofed, making it impossible to load the rack in a backward orientation; it was a far more effective approach than the warning sign on the other.

Nurses’ stations might be full of signs, such as “Avoid overtightening syringes in microbore tubing.” In this case, the hospital had started purchasing a new type of tubing whose connector had “wings” that gave nurses too much leverage when twisting. Instead of asking nurses to be careful, a root cause analysis might point to the design of the tubing or to the hospital’s purchasing decision. Too many signs can, in and of themselves, present a safety hazard. Even if each sign bears a truly important admonition, workers can develop what pilots call “alarm fatigue,” with too many competing messages clamoring for their attention.

Of course, we would not want just to go around taking down signs without first preventing the problem. Signs may be, at best, a short-term response (or a symptom of a problem) that can eventually be replaced with a root cause fix.

Why 100% Inspection Is Not 100% Effective

Healthcare organizations tend to rely on inspection and double checks, for which the focus is on finding problems before they reach the patient. Error prevention is preferable to inspection for a number of reasons. First, if we rely on people to inspect their own work or work done by others, we introduce the risk of human error into the process. At the end of a long day, a person might forget to do a particular inspection or check, a problem that we could address by rotating jobs frequently to keep people mentally alert. Or, we can rely on checklists, similar to what an airline pilot uses before every flight, as mentioned in Chapter 5. Asking people not to get fatigued is unrealistic and violates Lean’s core “respect for people” principle.

Adding extra inspections is not guaranteed to prevent surgical errors, as evidenced by Rhode Island Hospital, which was in the news for having performed four wrong-site brain surgeries over six years.[iii] After an incident in July 2007, the hospital was ordered to add a second physician to review the proper site before surgery (a form of inspection). Even so, another wrong-site surgery occurred in August 2007, leading to yet another review. The hospital is now requiring an attending physician to be present for brain surgery procedures, since the last error was committed by a resident. The hospital appears to be blaming residents, saying they are incapable of working unsupervised. The added attending physician is serving partly as an inspector of the resident’s work, and this inspection may suffer from the same sort of human error that let errors through when the earlier extra inspection was in place. Residents may very well be capable on their own, without errors, if better error-proofing methods and standardized work were in place. Lean thinkers would ask why those errors occurred, looking for the systemic problem that allowed the same error to occur so many times. As further evidence of a systemic problem that was not being solved, the same hospital had a wrong-side surgery on a cleft palate patient in 2009.[iv]

Eric Cropp, a pharmacist in Ohio, was convicted and jailed in 2009 for his role in a medication error that killed a two-year-old girl, Emily Jerry.[v] Cropp was blamed for not finding an error created upstream by a pharmacy technician who was admittedly distracted and violated a standardized work practice. Michael Cohen of the Institute for Safe Medication Practices was among those who spoke out and called Cropp an easy target, saying that healthcare quality and patient safety are better served by focusing on the process issues that allow tragedies like this to occur.[vi] Emily’s father, Chris Jerry, has appeared with Cropp at conferences, publicly forgiving him and speaking out about the injustice of jailing an individual for a systemic error.[vii] Dr. Lucian Leape, a world-renowned patient safety expert, said, “I think criminalization is a terrible thing. In every case, there were obvious explanations for why a mistake happened and those explanations have to do with the systems they were working in and the institutions that were responsible for those situations.”[viii]

When multiple people, in parallel or sequentially, have responsibility for inspecting for a defect, a normal human reaction is to get complacent, to think that the other person will catch an error if you miss it. Or, it is easy to “see” what we expect to see (the lack of an error) instead of what is actually in front of us. At one health system’s billing department, up to five different employees inspected charts to make sure details, such as the ordering physician’s name, were correct. In this case, even with all of the inspections, errors were frequently found at the final inspection step and sometimes got out as erroneous payment submissions.

Types of Error-Proofing

Error-proofing can be defined as the creation of devices or methods that either prevent defects or inexpensively and automatically inspect the outcomes of a process each time to determine whether the quality is acceptable or defective. Error-proofing is not a specific technology. Rather, it is a mindset and an approach that requires creativity among those who design equipment, design processes, or manage processes.

Make It Impossible to Create the Error

Ideally, error-proofing should be 100% effective in physically preventing an error from occurring. In everyday life, think about gasoline pump nozzles. Diesel nozzles are larger and do not fit into an unleaded gas vehicle. It is impossible to make that error. The error-proofing is more effective than if we only relied on signs or warning labels that said:

“Be careful; do not use diesel fuel in this vehicle.”

This error-proofing method does not, however, prevent the opposite error of using unleaded fuel in a diesel vehicle.

Corrected from the book text: Putting unleaded fuel into a diesel engine is MORE harmful than putting diesel into an unleaded vehicle. There’s an interesting history about why the more harmful mistake isn’t mistake-proofed. When unleaded fuel was introduced, the nozzle for it was made smaller to prevent a driver from putting leaded fuel into an unleaded vehicle. When leaded fuel was phased out, those nozzles were used for diesel.

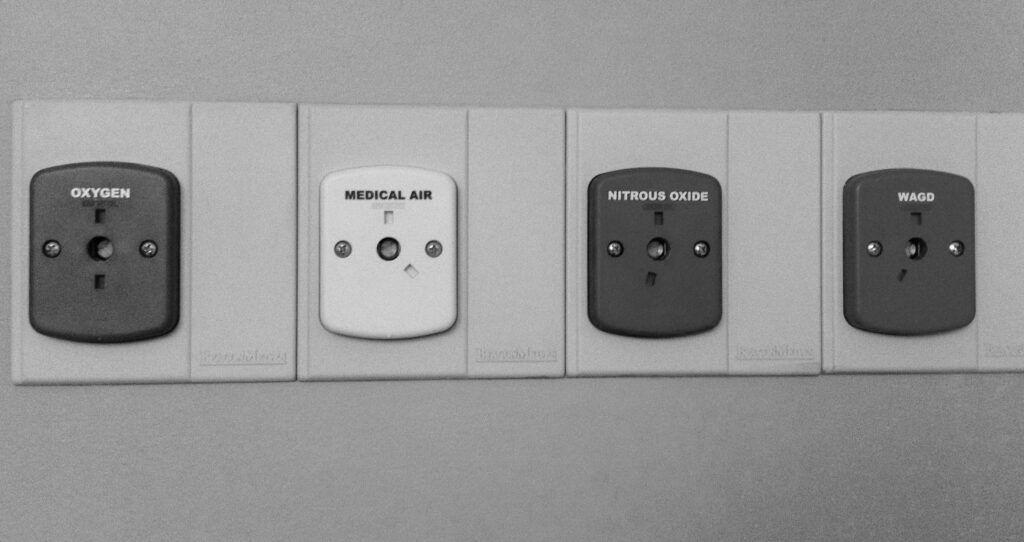

In a hospital, we can find examples of 100% error-proofing. One possible hospital error, similar to the gas pump example, is connecting a gas line to the wrong connector on the wall. Many regulators and gas lines have pins and indexing that prevent a user from connecting to the wrong line, such as medical air instead of oxygen, as shown in Figure 8.2. The different pins are more effective than color coding alone would have been. The connectors will not fit, and there is no way to circumvent the system to make them fit.[ix]

One type of preventable error is the injection of the wrong solution into the wrong IV line, an error that has been reported at least 1,200 times over a five-year period, meaning it has likely occurred far more than that, given the common under-reporting of errors.[x] Instead of telling clinicians just to be careful, some hospitals have switched to IV tubing that physically cannot be connected to feeding syringes—a strong form of error-proofing, similar to the gas line error-proofing. Again, a physical device that prevents an incorrect connection is more effective than simply color-coding the lines, as some hospitals have done.

In 2006, a Wisconsin nurse, Julie Thao, was convicted and imprisoned after mistakenly injecting an epidural medication, instead of an antibiotic, into the bloodstream of a 16-year-old expectant mother. The patient’s death “might have been avoided if containers, tubing, and connectors for epidural medications were vastly different from intravenous medications,” said a newsletter from the Institute for Safe Medication Practices. In fact, the Joint Commission had put out a bulletin in early 2006 warning of such line risks, yet a patient died, and an experienced nurse ended up in jail.[xi]

Make It Harder to Create the Error

It is not always possible to fully error-proof a process, so we can also aim to make it harder for errors to occur. Think about the software we use every day to create word-processing documents (or e-mail). One possible error is that the user accidentally clicks the “close” button, losing the work. Most software requires a confirmation step, a box that pops up and asks, “Are you sure?” It is still possible for a user to accidentally click “yes,” but the error is less likely to occur. A better, more complete form of error-proofing is software that continuously saves your work as a draft, preventing or severely minimizing data loss. Another type of error is forgetting to attach a file before hitting the send button. Some e-mail software looks for the word “attached” or “attachment” and warns users who try to send the message without a file attached.
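The attachment reminder can be sketched in a few lines. This is a minimal illustration assuming a simple keyword match; the name `check_before_send` and the word pattern are hypothetical, not taken from any particular e-mail product. Note that it only warns and the user can still send, which is why this counts as making the error harder rather than impossible.

```python
import re
from typing import List, Optional

# Hypothetical keyword pattern: matches "attach", "attached", "attachment", "attachments".
ATTACHMENT_WORDS = re.compile(r"\battach(?:ed|ments?)?\b", re.IGNORECASE)


def check_before_send(body: str, attachments: List[str]) -> Optional[str]:
    """Return a warning if the message mentions an attachment but none is attached."""
    if ATTACHMENT_WORDS.search(body) and not attachments:
        return "You mentioned an attachment, but no file is attached. Send anyway?"
    return None  # nothing suspicious; send normally
```

A keyword list will miss phrasings such as “see the enclosed file,” so this check reduces the error rather than eliminating it.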

In hospitals, a certain type of infusion pump has a known issue that makes data entry errors too easy to make; the issue even has its own name, the double-key bounce. The keypad often registers a single key press twice, so if a nurse enters 36, the pump might record 366, which could lead to an overdose.[xii] Some hospitals have posted signs asking employees to be careful. A better approach might be a software change that asks “Are you sure?” and requires an active response when repeated digits appear or when a dose above a certain value is entered. This approach would still not be as effective as a redesign that absolutely prevents the error from occurring. Such a change also would not prevent the mistaken entry of 90 instead of 9.0, highlighting the need for multiple countermeasures to address complex problems.
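A minimal sketch of the software change suggested above, assuming a hypothetical pump that passes the keyed string to a check before accepting it. The name `entry_warnings` and the 100-unit limit are placeholders for illustration; a real pump would draw its limits from a drug library.

```python
from typing import List

MAX_DOSE = 100.0  # hypothetical upper limit, for illustration only


def entry_warnings(keyed: str, max_dose: float = MAX_DOSE) -> List[str]:
    """Return reasons the pump should require an active confirmation before accepting the entry."""
    warnings: List[str] = []
    # The same digit twice in a row (e.g. "366") may be a key bounce rather than intent.
    if any(a == b and a.isdigit() for a, b in zip(keyed, keyed[1:])):
        warnings.append("repeated digit: possible key bounce")
    # A dose above the limit always needs an explicit confirmation.
    if float(keyed) > max_dose:
        warnings.append(f"dose {keyed} exceeds limit {max_dose}")
    return warnings


print(entry_warnings("36"))  # []
print(entry_warnings("90"))  # []  (the 90 vs. 9.0 error is not caught)
```

Here `entry_warnings("366")` returns two reasons to confirm, while `entry_warnings("90")` returns none, which is exactly the gap the text describes. A confirmation also flags legitimate entries such as 44, so it adds friction rather than certainty.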

Hospitals have already made some efforts to error-proof medication administration, as medication errors are a common cause of harm to patients; yet some experts estimate there is still, on average, one medication error per day for each hospital patient.[xiii] Automated storage cabinets are one error-proofing method that many hospitals have implemented to help ensure that nurses take the correct medications for their patients. With these cabinets, nurses must scan a bar code or enter a code to indicate the patient for whom they are taking medications. The computer-controlled cabinet opens just the drawer (and sometimes just the individual bin) that contains the correct medication. This makes it harder to take the wrong drug, but there are still errors that can occur:

- The nurse can reach into the wrong bin in the correct drawer (with some systems).

- The pharmacy tech might have loaded the wrong medication into the bin.

- The wrong medication might be in the correct package that the pharmacy tech loaded into the correct bin.

- The correct medication might still be given to the wrong patient after being taken from the cabinet.
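The cabinet logic can be sketched to show why the failure modes listed above slip through. This illustration assumes a hypothetical data model with bin-level locking (`BIN_ASSIGNMENTS`, `ACTIVE_ORDERS`, and `bins_to_unlock` are invented names). The cabinet knows only what each bin is supposed to hold, not what a technician actually loaded into it, and it cannot follow the drug to the bedside.

```python
from typing import Dict, List, Set

# Hypothetical data: the drug each bin is *supposed* to hold,
# and the active orders for each patient.
BIN_ASSIGNMENTS: Dict[str, str] = {"A1": "heparin", "A2": "insulin", "B1": "morphine"}
ACTIVE_ORDERS: Dict[str, Set[str]] = {"patient-123": {"heparin"}}


def bins_to_unlock(patient_id: str) -> List[str]:
    """Unlock only the bins assigned to drugs ordered for the scanned patient."""
    ordered = ACTIVE_ORDERS.get(patient_id, set())
    # The check trusts BIN_ASSIGNMENTS: if the wrong drug was loaded into bin "A1",
    # the cabinet still unlocks it, and nothing here tracks the drug after removal.
    return sorted(bin_id for bin_id, drug in BIN_ASSIGNMENTS.items() if drug in ordered)


print(bins_to_unlock("patient-123"))  # ['A1']
print(bins_to_unlock("unknown-patient"))  # []
```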

When error-proofing, we must avoid complacency and overreliance on a particular device. Properly error-proofing the entire process requires wider analysis and error-proofing methods at each step.

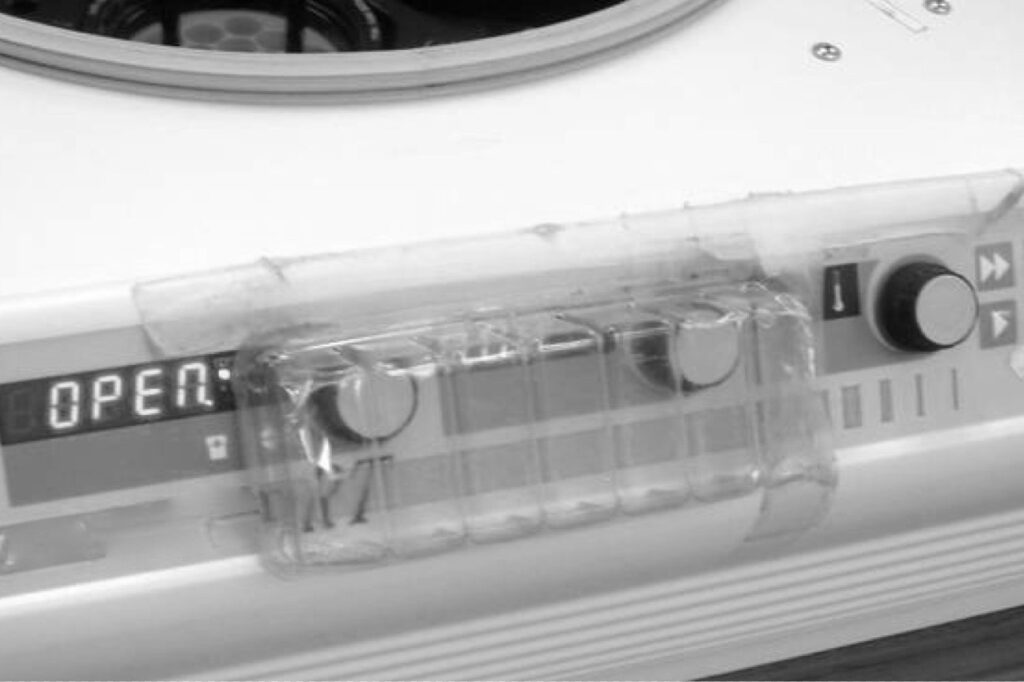

Often, the workplace can be retrofitted with simple, inexpensive error-proofing devices if we have the mindset of prevention. One laboratory implemented two simple error-proofing devices in a short time. In one area, a centrifuge had knobs that controlled timing and speed, knobs that were too easily bumped by a person walking by the machine. Instead of hanging a “be careful” sign, a technologist took a piece of clear plastic packaging material that would have otherwise been thrown away and put it over the knobs, as shown in Figure 8.3. The prevention method was effective, cost nothing, and was probably faster than making a sign. The mindset was in place to figure out how that error could be prevented.

In the microbiology area, visitors dropped off specimens on a counter and were often tempted to reach across the counter rather than walk to the end, where the official drop-off spot was located. The problem with reaching across the counter is that a person unfamiliar with the area could have easily burned himself or herself on a small incinerator that sat on the counter without an indication that it was hot. Instead of posting warning signs, a manager had maintenance install a plexiglass shield that prevented people from reaching across the bench, as shown in Figure 8.4. It was a small investment but helped prevent injury better than a sign.

Pharmacies often have signs warning personnel about high-risk medications, for which similar names or different doses can cause harm to patients. Many pharmacies are rethinking the practice of placing medications in alphabetical order on shelves in an effort to prevent some errors. In one case, UPMC St. Margaret reduced re-admission rates for chronic obstructive pulmonary disease (COPD) by 48% by moving different doses of a drug that is commonly used with these patients. Instead of the 0.63- and 1.25-milligram doses being side by side, they were placed in different drawers with distinctive labels.[xiv]

Make It Obvious the Error Has Occurred

Another error-proofing approach is to make it obvious when errors have occurred, through automated checks or simple inspection steps. The early Toyota weaving loom did not prevent the thread from breaking but quickly detected the problem, stopping the machine and preventing the bad fabric from being produced.

One possible error in instrument sterilization is an equipment malfunction or misuse that leaves an instrument pack improperly sterilized. Multiple methods might be used to error-proof the equipment or its use, but common practice also uses special tape wraps or inserts that change color or form black stripes to indicate proper sterilization. The absence of these visual indicators makes it obvious that an error has occurred, helping protect the patient.

In a hospital setting, there is a risk that intubation tubes are inserted into the esophagus instead of the trachea, which would cause harm by preventing air from getting to the patient’s lungs. A 1998 study indicated this happens in 5.4% of intubations.[xv] Another study suggested that “unrecognized esophageal intubations” occurred in 2% of cases, meaning the error was not detected or reversed quickly. Warning signs posted on the device or in the emergency department would not be an effective error-proofing strategy. If we cannot engineer the device to ensure the tube only goes into the airway, we can perform a simple test after each insertion. Plastic aspiration bulbs can be provided so the caregiver can see whether air from the patient’s lungs will reinflate a squeezed bulb within five seconds.[xvi] If not, we can suspect that the tube is in the esophagus and correct the error before the patient is harmed. This form of error-proofing is not 100% reliable because it depends on people following the standardized work of checking tube placement after every insertion.

Other error-proofing might include monitors and sensors that can automatically detect and signal the anesthesiologist that the patient was intubated incorrectly. However, monitoring oxygen saturation levels as an inspection step for this error has limitations. A chest X-ray might also be used as an inspection method, but studies have shown that it is ineffective.[xvii] “Commonly used” clinical inspection methods, in this case, are often inaccurate, so medical evidence should drive the standardized work for how clinicians check for intubation errors.

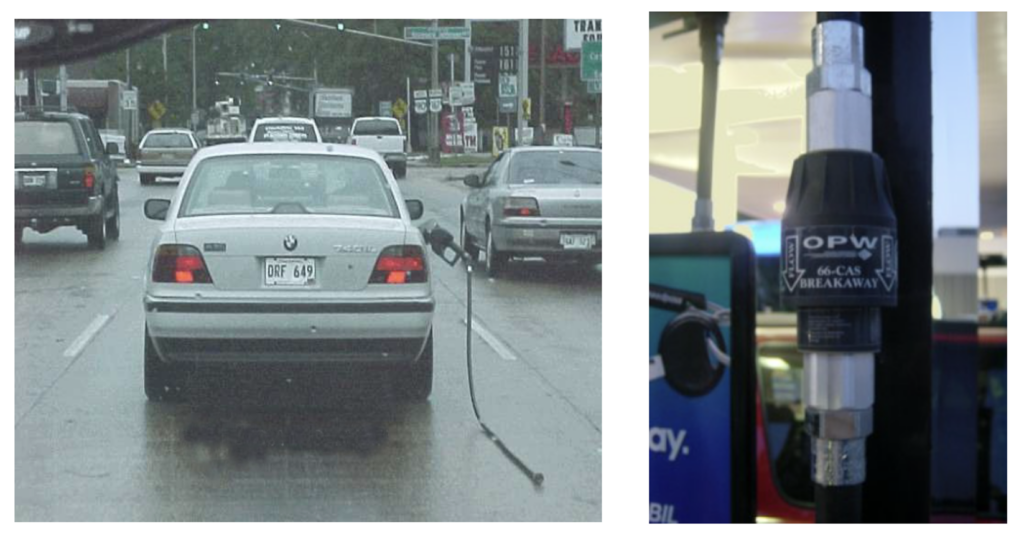

Make the System Robust So It Tolerates the Error

At gas stations, there is a risk that a customer could drive away without detaching the pump nozzle from the car. Gas stations have error-proofed this not by physically preventing the error or by hanging signs; instead, they have anticipated that the error could occur and designed the system to be robust enough to tolerate it. If a driver does drive off, the pump has a quick-release valve that snaps away and cuts off the flow of gasoline, preventing a spill or possible explosion (although you might look silly driving down the road with the nozzle and hose dragging from your vehicle).

In a laboratory, one hospital found a test instrument that had not been designed with this kind of robustness: it could not tolerate spills of patient specimens. The lab responded by posting two separate signs on the instrument that told employees:

“Do Not Spill; Wipe Spills Immediately.”

The signs did nothing to prevent the error as employees generally tried not to spill patient specimens. The root cause of the situation was that a circuit board in the instrument was exposed underneath the place where specimens were loaded. A different hospital using that same equipment posted a memo notifying staff that they had “blown three electronic boards in just a few weeks,” which cost $1,000 each. The memo emphasized that people should not pour off specimens or handle liquids above the equipment as part of their standardized work. As a better root cause preventive measure, the designer of the instrument should have anticipated that patient specimen spills were likely to occur at some point in the instrument’s use and taken steps to protect the sensitive board.

One lesson for hospitals is to consider design and error-proofing, even going through a failure mode and effects analysis (FMEA) exercise, when buying new equipment. Hospitals can pressure manufacturers and suppliers to build error-proofing devices into equipment, using their market power to reward suppliers who make equipment that is more robust against foreseeable errors.

Error-Proofing, Not Dummy-Proofing

Error-proofing has a Japanese equivalent term, poka yoke, which is sometimes used in Lean circles. With this phrase, the focus is on the error itself, and our problem-solving response is to understand and prevent the error. The phrase mistake-proofing is sometimes used interchangeably with error-proofing, but that phrase seems to steer focus toward who made the mistake and toward blame. Errors often occur as the result of a system, whereas a mistake is defined as “a wrong action attributable to bad judgment or ignorance or inattention.”[xviii] Not all errors are caused by bad judgment, ignorance, or inattention.

Everyday use of the English language features phrases such as “idiot-proofing” or “dummy-proofing.” Terminology like this should not be used, as it does not demonstrate respect for people. Errors, particularly in hospital settings, are not caused because people are stupid. Errors are caused by smart people who mean well but are forced to work in complex and often broken systems.

There is another Japanese phrase, baka yoke, which translates to “fool-proofing.” Shigeo Shingo told a story about using the phrase baka yoke in a factory in 1963. Shingo wrote, “One of the company’s part-time employees burst into tears when the department head explained that a ‘foolproofing’ mechanism had been installed because workers sometimes mixed up left- and right-hand parts. ‘Have I really been such a fool?’ the employee sobbed.”[xix]

As much as some of us might not want to admit it, we are all human, even in a medical environment, and we are prone to committing errors and mistakes. Systems need to be designed and error-proofed accordingly.

Examples of Error-Proofing in Hospitals

Many error-proofing methods already exist in hospitals, with varying degrees of effectiveness. Many of the examples we see involve error-proofing through standardized work, which is more likely to reduce errors than prevent them altogether.

Banned Abbreviations as Error-Proofing

Many hospitals have implemented lists of banned handwritten abbreviations that have the potential to be confusing or misinterpreted. Rather than telling doctors and employees to be careful, hospitals have recognized there is a risk from using the old abbreviations.

For example, micrograms and milligrams can be confused when micrograms is written by hand and abbreviated with µ, the Greek letter for micro, leading to an incorrect dose being given to a patient. The preferred abbreviation for micrograms is “mcg,” which is much harder to confuse with milligrams (“mg”) when written by hand. In addition, the letter U, used for units, can be mistaken for a zero, leading to a dosing error.
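Where orders are typed rather than handwritten, for example in free-text fields, a “do not use” list can be enforced by software instead of relying on memory. The sketch below assumes an illustrative subset of banned forms (a micro sign before “g,” and “U” after a number); the patterns and the name `flag_banned` are hypothetical, and a real list would come from the hospital’s own policy.

```python
import re
from typing import List, Tuple

# Illustrative subset only; a hospital's actual "do not use" list would replace this.
BANNED = [
    # A number followed by the micro sign (U+00B5) or Greek mu (U+03BC) and "g".
    (re.compile(r"\d+\s*[\u00b5\u03bc]g\b"), "write 'mcg' instead of a micro-sign abbreviation"),
    # A number followed by a standalone "U" (but not "Units").
    (re.compile(r"\d+\s*U\b"), "write 'units' instead of 'U'"),
]


def flag_banned(order_text: str) -> List[Tuple[str, str]]:
    """Return (matched text, suggested fix) for each banned abbreviation found."""
    hits: List[Tuple[str, str]] = []
    for pattern, fix in BANNED:
        for match in pattern.finditer(order_text):
            hits.append((match.group(0), fix))
    return hits
```

A check like this only covers typed text; handwritten orders still depend on people following the standardized work.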

The challenge with this form of error-proofing is that we are relying on individuals to follow the new standardized work. It is not enough to say, “We have a policy in place, so the problem is solved.” Leaders must constantly check for adherence to the standardized work and need to encourage employees to monitor each other for the proper use of abbreviations.

In a Lean hospital, when the old abbreviation is found, we cannot just work around the problem by fixing the abbreviation (as might be done by a nurse or a unit clerk). Employees must feel obligated to address the process defect (somebody using the banned abbreviation) with the physician or report the practice to managers, using something like the “patient safety alert” process at Virginia Mason Medical Center (Seattle, WA).[xx] Unfortunately, a survey by the American Association of Critical-Care Nurses showed that fewer than 10% of physicians, nurses, and other critical care providers directly confronted their colleagues, and 20% of physicians had seen patient harm as a result. Fear of punishment or retribution is one reason caregivers avoid confronting each other, so leaders have to create an environment in which raising a patient safety concern leads to neither. Hospitals might also want to consider providing training in constructive confrontation and communication skills to help employees work together more effectively. Teaching healthcare workers the most effective way to speak up is one aspect of the increasingly popular “crew resource management” (CRM) training that brings aviation and cockpit safety practices into healthcare.[xxi]

In the CRM approach, workers are not “encouraged” or “empowered” to speak up: They are expected to speak up. At Allegheny General Hospital in Pennsylvania, where central line insertion and care were 100% standardized, the chief of medicine, Dr. Richard Shannon, instructed the nurses to stop any nonstandard procedure. Until then, nurses stopping a procedure had not been part of the hospital culture. Ultimately, the nurses did stop procedures that were being done incorrectly, and Dr. Shannon backed them up. The result was a change in culture, more respect for nurses as team members, and drastically reduced central-line infections.[xxii]

Leaders must follow up with physicians and remind them of the importance of using the approved abbreviations, emphasizing the impact on patient safety (focusing on the customer) and, secondarily, the risk to the hospital if an error were to occur. It can be a difficult dynamic when we are reliant on keeping physicians happy to drive new patient revenue to the hospital. In cases like this, hospitals in a community should reach common agreements that they are going to hold all physicians accountable, equally, to standardized work and patient safety guidelines, reducing the risk that a physician takes his or her patients to a competing hospital.

Computer Systems as Error-Proofing

One technological method for reducing handwriting errors for pharmacy prescriptions, laboratory test orders, or other communications is electronic systems, such as computerized physician order entry (CPOE) or other electronic medical record (EMR) systems. The Leapfrog Group estimates that if CPOE were implemented at all urban hospitals in the United States, as many as three million serious medication errors could be avoided each year.[xxiii] By 2010, only 14% of hospitals had at least 10% CPOE use, the minimum required for so-called meaningful use standards.[xxiv] But, as a result of federal stimulus efforts, 72% of hospitals had CPOE by 2012.[xxv] A study at Brigham and Women’s Hospital (Boston) showed that CPOE adoption reduced error rates by 55%, and serious errors fell by 88%.[xxvi]

Even at hospitals with CPOE, physician resistance is often high, particularly when systems are not designed effectively around physician workflow. As with nurses not wanting to use mobile computer carts, physicians might resist using CPOE or EMR systems if the technology slows them down. The situation is complicated by dynamics between physicians and hospitals, where most physicians are not direct employees. This eliminates the ability of management to mandate the use of the system through traditional supervisor control. Of course, as discussed in Chapter 5, the Lean management style relies more on explaining why and convincing people of the benefits of a tool or method instead of relying on formal positional authority. When physicians or others resist standardized work or new tools, we should first ask why.

The former Toyota executive Gary Convis is often quoted as being told by Japanese Toyota leaders to “lead the organization as if I had no power.”[xxvii] Although Convis had much formal power (with thousands of employees reporting to him), relying on mandates was a last resort, after first using coaching, setting an example, and understanding others to help them achieve their goals. This leadership style should be particularly transferable to a hospital environment in which senior leaders have little or no formal control over physicians.

In a further connection between quality and efficiency, CPOE and other hospital information systems provide automated checks of pharmacy prescriptions, inspecting for interactions and patient allergies, among other process defects. This reduces the amount of time required of pharmacists for manual order review and inspection, freeing them to serve more of a clinical advisory role to physicians and patients.

Alerts sound like a good solution, but some studies show “alerts are only modestly effective at best.”[xxviii] EMR and CPOE systems also introduce the risk of alarm fatigue if they present too many warnings that clinicians consider incorrect or unimportant. Experts recommend improvements such as eliminating inconsequential alerts, tailoring alerts to patient characteristics, and allowing only severe alerts to interrupt workflows.[xxix]
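The three recommendations can be illustrated with a small routing function. This is a sketch under stated assumptions: the severity tiers, the `applies_if` field, and the name `route_alerts` are hypothetical, not features of any specific EMR or CPOE product.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class Alert:
    message: str
    severity: str                     # hypothetical tiers: "low", "moderate", "severe"
    applies_if: Optional[str] = None  # hypothetical: condition that makes the alert relevant


def route_alerts(alerts: List[Alert], conditions: Set[str]) -> Tuple[List[Alert], List[Alert]]:
    """Split alerts into (interruptive, non-interruptive), dropping irrelevant ones."""
    interrupt: List[Alert] = []
    passive: List[Alert] = []
    for alert in alerts:
        if alert.severity == "low":
            continue  # eliminate inconsequential alerts
        if alert.applies_if is not None and alert.applies_if not in conditions:
            continue  # tailor to patient characteristics
        if alert.severity == "severe":
            interrupt.append(alert)  # only severe alerts interrupt the workflow
        else:
            passive.append(alert)    # shown without blocking the clinician
    return interrupt, passive
```

The design choice is to shrink the interruptive list so that the alerts that do stop a clinician are rare enough to be taken seriously.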

Dr. Bob Wachter of UCSF Medical Center (San Francisco) wrote about how new residents were told by senior residents to “ignore the alerts” by just clicking through them.[xxx] This, among other problems, led to a pediatric patient mistakenly receiving 38 times the normal dose of a medication. Pharmacists and nurses along the way either ignored warnings or were too trusting of automation and technology that gave the appearance of being error-proofed. Scanning the bar codes on 38 times too many pills only ensures that the wrong dose is being given to the right patient. Technology can help, but it must be part of a holistic system design.
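The gap described above can be shown in code: an identity check and a quantity check answer different questions. In this hypothetical sketch (the `Administration` record, the function names, and the 2x threshold are all assumptions), the bar code check passes for 38 tablets because the patient and drug both match; only a separate dose check flags the problem.

```python
from dataclasses import dataclass


@dataclass
class Administration:
    patient_id: str  # from the wristband scan
    drug: str        # from the package bar code scan
    tablets: int     # number of tablets about to be given


def barcode_match(admin: Administration, order_patient: str, order_drug: str) -> bool:
    """Identity check only: right patient and right drug, says nothing about quantity."""
    return admin.patient_id == order_patient and admin.drug == order_drug


def dose_plausible(admin: Administration, usual_tablets: int, max_multiple: float = 2.0) -> bool:
    """Hypothetical quantity check: reject anything above max_multiple times the usual dose."""
    return admin.tablets <= usual_tablets * max_multiple


admin = Administration(patient_id="patient-123", drug="example-drug", tablets=38)
print(barcode_match(admin, "patient-123", "example-drug"))  # True
print(dose_plausible(admin, usual_tablets=1))  # False
```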

Case Example: Best Practices vs. PDSA

While Lean emphasizes a structured, controlled, and experimental method for solving problems and testing new methods (Plan-Do-Study-Act, or PDSA), some question whether hospitals need only find and adopt proven “best practices” recommended by other health systems, clinical associations, or government bodies.

A hospital had a patient fall that, sadly, resulted in a fatal injury. One of the nurses involved reviewed three years’ worth of charts of patients who had fallen. She looked for commonality in the patients and their conditions and developed a scale and a paper form that could be used to evaluate patients for the risk of falling, allowing caregivers to better prevent falls. When presented with that option, senior leaders told staff to implement and use the Hendrich II fall risk scale instead, as that is considered to be a best practice.

What happened? The number of falls actually increased, even with that protocol being used. Senior leaders were frustrated with the results, but staff pushed back and reminded them they chose the scale. Staff were allowed to try their own form, which was actually more prescriptive and more standardized. What happened then? Falls were reduced significantly. The team continued to improve their scale and protocol, adding checks with CNAs, leading to even better results.

Later, there was another patient fall. The hospital CEO came and personally ran a 10-hour root cause analysis meeting. The chief nursing officer said the meeting “started the healing process,” as “everything the CEO said gave them confidence they had done everything they could,” and that the protocol had been followed. However, the analysis revealed a possibility no one had foreseen, which gave them an opportunity to update the scale for future use.

Preventing Surgery Errors through Error-Proofing

While not the most frequently occurring medical mistake, wrong-site surgeries can severely harm patients and create high-profile cases in the news. One study estimated that a typical large hospital would experience a wrong-site surgery every 5–10 years.[xxxi] Mistakes like these can be error-proofed through standardized work (including checklists) and visual management methods, as they are often caused by poor communication, time pressures, and organizational dynamics that prevent people from speaking up.

In July 2003, the Joint Commission released its “Universal Protocol for Preventing Wrong Site, Wrong Procedure, and Wrong Person Surgery,” aimed at putting standardized work methods in place for accredited hospitals. The three major components of the protocol are:

- A formal process for presurgical verification

- Marking the operative site

- A time-out performed immediately before the procedure

Since this is a standardized work approach to error-proofing, the success of the method depends mainly on consistently following the standard. The Joint Commission stated that time-out compliance is only 78–80%,[xxxii] a slight increase from the 74.2% in its 2007 report.[xxxiii] Nurses and other employees are often afraid to speak up to certain surgeons, so leaders have an important role in creating an environment in which fear does not prevent the time-out from taking place.

Guidelines call for the person performing the procedure to mark the site unambiguously (for example, with the word “YES”) and to make sure the mark remains visible after skin prep. An “X” (a commonly used mark) might be interpreted as either “cut here” or “wrong spot,” an ambiguity that could lead to an error. Compliance with site marking is higher, at 93.4%. Leaders need to ensure that surgeons are not just following the letter of the law; one news account reported that some surgeons make what one surgical chief called “passive-aggressive marks,” tiny marks that cannot be seen and therefore cannot help.[xxxiv]

As with any example of standardized work, leaders have an obligation to look for signs that methods like the universal protocol are not being followed. We need to do so proactively, rather than assuming the standardized work is being followed and reacting only after an error occurs. Inspections and audits can be done respectfully while still sending a message that patient safety must always be the top priority. Leaders can also continually emphasize the importance of taking time for quality when employees are concerned about moving patients through operating rooms as quickly as possible.

Stopping the Line (Andon)

A method frequently associated with Toyota is the andon: cords or buttons near the assembly line that any worker can activate upon seeing a problem. Lights blink, and chimes sound. Within seconds, a team leader appears to help the production associate. The line will stop if additional time is needed to solve the problem before the car moves on to the next station. In more than 99% of cases, the problem is solved quickly without stopping production.[xxxv] Problems are generally fixed at the source (the concept of jidoka) instead of being passed along to be fixed at the end of the line.

In a hospital setting, we can teach the andon concept, the idea that any process should be stopped at the sign of a problem (or problems should be solved immediately if possible). The time-out process is one example, but ThedaCare (Wisconsin) took the idea of stopping the line even further, in the name of patient safety.

In 2004, ThedaCare had gone nine years without a wrong-site surgery before one occurred. Then it soon had three more, for a total of four errors in eight weeks. When she learned about the errors, hospital president Kathryn Correia decided that the operating rooms would be shut down until the root cause of the problem had been found, stopping “the line.” ThedaCare’s error, according to former CEO Dr. John Toussaint, was that they had not stopped the line immediately after the first error to find the root cause right then. Because the organization was early in its Lean journey, there were “no metrics defined,” and errors “slipped under the radar” without being brought to the attention of leaders.[xxxvi] As ThedaCare investigated the errors, it discovered that surgical teams had stopped doing the time-out procedure, another warning that we cannot let success (good results) lead to complacency (falling back to bad processes). As a temporary fix, the hospital added an independent auditor to be present at each presurgical time-out, with random audits continuing over the long term.

Understanding Lean is one thing; implementing it in the face of an existing culture can be another story altogether. For example, Ford Motor Company’s new truck plant (Dearborn, MI) copied a Toyota tool by installing andon cords. Workers, though, were afraid to pull them, expecting a negative response from their supervisors instead of a supportive or helpful one.

Why do Toyota employees pull the cord 2,000 times per week, while Ford employees only do so twice a week at this one plant?[xxxvii] It is not because Toyota has 1,000 times the problems. Toyota has created a culture in which it is acceptable, even encouraged, to report problems instead of covering them up. At the Ford plant, the infrequent use of the andon cords was attributed to “the legacy of generations of mistrust between shop-floor workers and managers.”[xxxviii]

The hospital equivalent of andon cord pulls, or line stoppages, should be immediate and direct communication. At some hospitals, the mechanisms for identifying problems include an e-mail account and a phone line (which sometimes goes to voice mail). While it is good to have avenues for employees to report safety concerns, a true andon system requires people to stop and address the problem (or at least ask a question) immediately, so that patient harm can be prevented. Again, leaders have a responsibility to build a culture in which employees can speak up without fear of retribution from colleagues.

Edit: That’s now referred to as “Psychological Safety”

One hospital described a “potential incident” that resulted from a physician ordering Coumadin for a patient who had very low hemoglobin. A nurse noticed the potentially unsafe condition and asked, “Are you aware of the bleeding time test result?” The physician then said to “hold off” on the medication. In a Lean culture, staff need to feel free to speak up, even if it turns out they are incorrect about a potential risk. If the physician had not responded appropriately, the nurse would have needed a way to escalate the concern.

Virginia Mason Medical Center has been a leader in applying the andon philosophy, calling its alerts “patient safety alerts,” or PSAs.[xxxix] PSAs are reviewed by a specialist and categorized as:

- Red (1%): Life-threatening situations, a “never event,” or anything that could pose serious harm to a patient

- Orange (8%): Less-severe situations

- Yellow: Slips or latent errors (situations that carry the potential for patient harm)

Beyond the mechanisms for reporting problems, the leadership team at Virginia Mason has emphasized that they have “got your back” if you are a staff member who raises a concern, reducing the fear of retribution. By 2004, an estimated one-third of employees had reported a PSA, and the hospital generally sees 800 to 1,000 reports each month. Jamie Leviton, manager of patient safety, says, “More PSAs are not an indication that we are less safe but that our teams feel safer to report—which is the ultimate goal.”[xl]

The hospital attributed staff acceptance of this system to the fast response that people received when calling an alert and the cultural changes that have surrounded this system. At Virginia Mason, problems, falls, and near misses lead to systemic improvement, not punishment.

As a result of their safety improvement efforts, professional liability costs at Virginia Mason fell by 26% from 2007 to 2008 and declined another 12% the next year.[xli] In recognition of its quality and safety improvements, Virginia Mason was also named one of two “Hospitals of the Decade” by the Leapfrog Group.[xlii]

Error-Proofing the Error-Proofing

When implementing error-proofing methods, we have to take care that employees do not have easy ways of working around the new system. One example, from outside the hospital, was shared by an employee who had observed a neighbor using an electric lawn mower with an error-proofing device. The user had to hold a lever against the handle to keep the motor running; as a safety measure, releasing that lever stopped the motor and blade. But, in an effort to save motion, the neighbor circumvented the protection by wrapping the electric cord around the lever so it would stay engaged without being held, creating a safety problem and risk of injury.

We should keep that example in mind when looking at error-proofing methods, particularly when the method creates extra work for employees. If employees are overburdened and already have too much other waste in their day, they may resist the extra work or look for ways to cut corners to save time. We need to anticipate how employees might circumvent the error-proofing by applying the same thought process that went into the original error-proofing itself:

- How can we prevent the circumvention of error-proofing?

- Why do people feel the need to circumvent error-proofing?

- How can we make it harder to circumvent error-proofing?

- How can we make it obvious or apparent that the error-proofing has been circumvented?

Leaders must watch for the proper use of error-proofing methods and hold people accountable for following the correct standardized work. At the same time, we should first ask why, as in “Why would that employee want to circumvent the error-proofing?” We have to look for the root cause: perhaps there is not enough time in his or her day (a need to reduce waste), or perhaps the error-proofing method is too hard to use.

In some hospitals, nurses are required to scan bar codes on patient wristbands to ensure the proper match of patient to medication. If nurses call in sick and a unit is understaffed, the nurses on duty might feel pressured to cut corners to save time. Nurses sometimes create surrogate patient wristband labels to scan all of the medications at once (in a batch) instead of doing it at the bedside. This corner-cutting is a workaround to the problem of being understaffed. Lean hospitals would hold nurses accountable but would also look to solve the root cause of the problem—the pressure and the need to cut corners. A five whys analysis could be performed to identify weaknesses in staffing policies and reaction plans for when nurses call in sick.

Many anatomic pathology labs now use barcode labels on “blocks” containing processed patient tissue. As technologists cut blocks to create slides to be read by pathologists, scanning the labels on the block and the slide can flag mismatches and errors of the type that caused the Darrie Eason case from Chapter 7. But, as with nurses, the error-proofing technology can be circumvented if technologists are not disciplined about keeping only one patient’s specimen and slides in their work area at any given time.

Conclusion

It’s easy for a hospital to say, “Patient safety is always our top priority,” but is that really the case every day? Hospitals are full of smart, conscientious employees, yet errors and patient harm still occur. If telling people to be careful were effective, we would have already eliminated quality and patient safety problems in our hospitals. Error-proofing is a mindset that we have to adopt, always asking why an error could have occurred and what we can do to prevent that error from occurring the next time. In keeping with the principle of respect for people, Lean thinkers assume that people want to do safe, high-quality work; we just have to design a culture with systems and processes that allow that to happen.

Lean Lessons

Being careful and hanging warning signs is not enough to prevent errors.

A 100% inspection is not 100% effective.

Adding more inspection steps will not ensure quality outcomes.

Error-proofing (poka yoke) physically prevents errors from occurring or makes them less likely to occur.

Avoid phrases like “dummy-proofing” to keep with the principle of respect for people.

Do not forget to error-proof the error-proofing.

Points for Group Discussion

Are the caution and warning signs in your hospital anything more than a short-term response to quality problems?

Can you find a sign that can be replaced with better standardized work or with error-proofing?

How would you evaluate your equipment and tools differently, keeping error-proofing in mind?

How should leaders react if presurgical time-outs are not taking place?

What are frequently occurring errors and mistakes in our area?

What ideas for simple error-proofing do our team members have?

How do we ensure that methods like banned abbreviations are followed all of the time?

How can hospitals better learn from mistakes made at other facilities?

In what circumstances would it be appropriate for society to jail individuals who are involved in errors that harm patients?

Can you recall a time when patient safety was made a top priority, even if that wasn’t a popular decision or if it cost more in the short term? How can you celebrate stories like that in the organization?

[i] Toyota Motor Corporation, “Toyota Production System,” http://www.toyota-global.com/company/vision_philosophy/toyota_production_system/ (accessed March 26, 2015).

[ii] Toyota Motor Corporation, “Toyota Production System,” http://www.toyota-global.com/company/vision_philosophy/toyota_production_system/ (accessed March 26, 2015).

[iii] Kowalczyk, Liz, “R.I. Raps Hospital for Errors in Surgery,” Boston Globe, November 27, 2007, http://www.boston.com/news/local/articles/2007/11/27/ri_raps_hospital_for_errors_in_surgery/ (accessed March 26, 2015).

[iv] Brownstein, Joseph, “Rhode Island Hospital Fined for Fifth Surgery Error in Two Years,” http://abcnews.go.com/Health/rhode-island-hospital-fined-surgery-error-years/story?id=8988619 (accessed October 16, 2015).

[v] Atassi, Leila, “Former Pharmacist Eric Cropp Gets 6 Months in Jail in Emily Jerry’s Death from Wrong Chemotherapy Solution,” http://blog.cleveland.com/metro/2009/08/_former_pharmacist_eric_cropp.html (accessed April 6, 2015).

[vi] Institute for Safe Medication Practices, “An Injustice Has Been Done: Jail Time Given to Pharmacist Who Made an Error,” http://www.ismp.org/pressroom/injustice-jailtime-for-pharmacist.asp (accessed October 16, 2015).

[vii] Emily Jerry Foundation, “Surfing the Healthcare Tsunami: Chris Jerry & Eric Cropp Unseen Footage,” https://www.youtube.com/watch?v=MseId9FcVOI (accessed October 16, 2015).

[viii] Emily Jerry Foundation.

[ix] Grout, John, Mistake-Proofing the Design of Health Care Processes, AHRQ Publication 07-0020 (Rockville, MD: Agency for Healthcare Research and Quality, 2007), 41, 76.

[x] Wahlberg, David, “Day 1: Medical Tubing Mistakes Can Be Deadly,” Wisconsin State Journal, http://host.madison.com/news/local/health_med_fit/day-medical-tubing-mistakes-can-be-deadly/article_bc888491-1f7d-5008-be21-31009a4b253a.html (accessed April 6, 2015).

[xi] Joint Commission, “Tubing Misconnections—A Persistent and Potentially Deadly Occurrence,” http://www.jointcommission.org/sentinel_event_alert_issue_36_tubing_misconnections%E2%80%94a_persistent_and_potentially_deadly_occurrence/ (accessed April 6, 2015).

[xii] “Double Key Bounce and Double Keying Errors,” Institute for Safe Medication Practices, January 12, 2006, http://www.ismp.org/Newsletters/acutecare/articles/20060112.asp?ptr=y (accessed March 26, 2015).

[xiii] Anderson, Pamela, and Terri Townsend, “Medication Errors: Don’t Let Them Happen to You,” http://www.americannursetoday.com/assets/0/434/436/440/6276/6334/6350/6356/8b8dac76-6061-4521-8b43-d0928ef8de07.pdf (accessed October 16, 2015).

[xiv] California Healthcare Advocates, “Creative Interventions Reduce Hospital Readmissions for Medicare Beneficiaries,” October 7, 2010, http://www.cahealthadvocates.org/news/basics/2010/creative.html (accessed March 26, 2015).

[xv] Sakles, J. C., Laurin, E. G., Rantapaa, A. A., and Panacek, E., “Airway Management in the Emergency Department: A One-Year Study of 610 Tracheal Intubations,” Annals of Emergency Medicine, 1998, 31:325–32.

[xvi] Grout, Mistake-Proofing, 78.

[xvii] Dittrich, Kenneth C., MD, “Delayed Recognition of Esophageal Intubation,” CJEM: Canadian Journal of Emergency Medicine, 2002, 4, no. 1: 41–44, http://www.cjem-online.ca/v4/n1/p41 (accessed April 9, 2011).

[xviii] Vocabulary.com, “Mistake,” http://www.vocabulary.com/dictionary/mistake (accessed October 16, 2015).

[xix] Shingo, Shigeo, Zero Quality Control: Source Inspection and the Poka-Yoke System (New York: Productivity Press, 1986), 45.

[xx] Kenney, Charles, Transforming Healthcare: Virginia Mason Medical Center’s Pursuit of the Perfect Patient Experience (New York: Productivity Press, 2010), 50.

[xxi] Gaffney, F. Andrew, Stephen W. Harden, and Rhea Seddon, Crew Resource Management: The Flight Plan for Lasting Change in Patient Safety (Marblehead, MA: HCPro, 2005), 1.

[xxii] Grunden, Naida, The Pittsburgh Way to Efficient Healthcare: Improving Patient Care Using Toyota Based Methods (New York: Productivity Press, 2007), 53.

[xxiii] Leapfrog Group, “Computerized Physician Order Entry,” http://www.leapfroggroup.org/for_hospitals/leapfrog_safety_practices/cpoe (accessed October 16, 2015).

[xxiv] Monegain, Bernie, “CPOE: Stumbling Block on Way to Meaningful Use,” Healthcare IT News, June 17, 2010, http://www.healthcareitnews.com/news/cpoe-stumbling-block-way-meaningful-use (accessed March 26, 2015).

[xxv] Wood, Debra, “EHR Adoption Report: The Latest Trends,” http://www.amnhealthcare.com/latest-healthcare-news/ehr-adoption-report-latest-trends/ (accessed October 16, 2015).

[xxvi] Leapfrog Group.

[xxvii] Convis, Gary, “Role of Management in a Lean Manufacturing Environment,” Society of Automotive Engineers, http://www.sae.org/manufacturing/lean/column/leanjul01.htm (accessed March 26, 2015).

[xxviii] PSNet, “Alarm Fatigue,” http://psnet.ahrq.gov/primer.aspx?primerID=28 (accessed October 16, 2015).

[xxix] PSNet.

[xxx] Wachter, Robert, MD, The Digital Doctor (New York: McGraw-Hill Education, 2015), 135.

[xxxi] Kwaan, Mary, David Studdert, Michael J. Zinner, and Atul A. Gawande, “Incidence, Patterns, and Prevention of Wrong-Site Surgery,” Archives of Surgery, 2006, 141: 353–58.

[xxxii] ISHN, “Time-out designed to avoid surgical mistakes being largely ignored,” http://www.ishn.com/articles/95150-time-out-designed-to-avoid-surgical-mistakes-being-largely-ignored (accessed October 16, 2015).

[xxxiii] Joint Commission, “Improving America’s Hospitals: The Joint Commission’s Annual Report on Quality and Safety—2007,” http://www.jointcommission.org/Improving_Americas_Hospitals_The_Joint_Commissions_Annual_Report_on_Quality_and_Safety_-_2007/ (accessed April 6, 2015).

[xxxiv] Davis, Robert, “‘Wrong Site’ Surgeries on the Rise,” USA Today, April 17, 2006, http://usatoday30.usatoday.com/news/health/2006-04-17-wrong-surgery_x.htm (accessed April 6, 2015).

[xxxv] Meier, David, personal interview, January 2, 2008.

[xxxvi] Toussaint, John, and Roger Gerard, On the Mend: Revolutionizing Healthcare to Save Lives and Transform the Industry (Cambridge, MA: Lean Enterprise Institute, 2010), 88.

[xxxvii] Schifferes, Steve, “The Triumph of Lean Production,” BBC News Online, February 27, 2007, http://news.bbc.co.uk/2/hi/business/6346315.stm (accessed March 26, 2015).

[xxxviii] Ibid.

[xxxix] Kenney, Transforming Healthcare, 52.

[xl] National Patient Safety Foundation, “Environmental Factors That Contribute to Falls,” http://www.npsf.org/blogpost/1158873/Patient-Safety0-Blog?tag=&DGPCrSrt=&DGPCrPg=2 (accessed October 16, 2015).

[xli] Kenney, 65.

[xlii] The Leapfrog Group, “The Leapfrog Group Announces Top Hospitals of the Decade,” November 30, 2010, http://www.leapfroggroup.org/news/leapfrog_news/4784721 (accessed March 26, 2015).
